Using Artificial Grammar Limitations

Speech recognition is a valuable addition to any simulation training system, but only if implemented correctly. There are many considerations for what “implemented correctly” means. In this article, we discuss the use of artificial grammar limitations (e.g., state-based grammar) and why they are usually not a good method for improving accuracy. We also explain why this method can be detrimental to simulator usability and effectiveness.

An air traffic control simulator equipped with automatic speech recognition (ASR) and a well-defined grammar must match a spoken phrase against tens of thousands of supported phrases, with an incalculable number of phrase variations and permutations. Several commercially available ASR systems are simply not capable of processing large grammars. This may be because of slow decoding speed, memory usage, or arbitrary limitations in old code. That is unsurprising given the origins of ASR in telephone call centers.

Artificial grammar limitations are not a solution to poor native ASR decoder accuracy. Weak acoustic models and ASR decoder configuration settings are the most likely reasons for decoder errors.

Grammar Limitation Examples

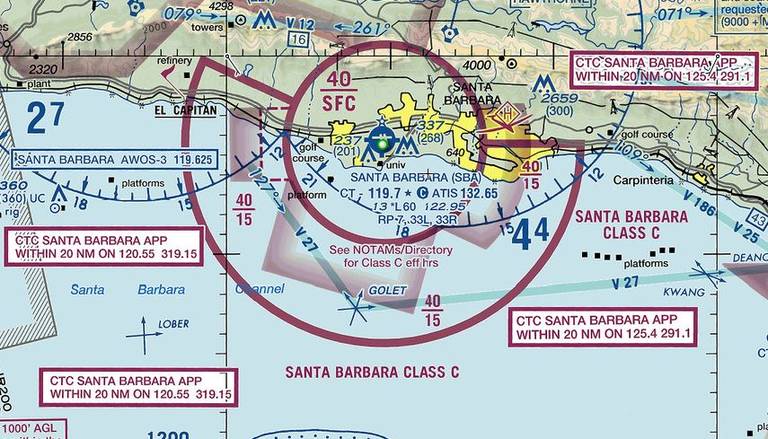

Artificial grammar limitations restrict the available search phrases. Limitations can be based on many criteria. Radio frequency, aircraft flight state, and geographical area are common in aviation simulation. Why support the phrase “Turn left heading” if the frequency is for ground control operations? The turn heading instruction is not used by a ground controller. Similarly, state-based grammar limits the available grammar by the reported flight state.

The classifications serve a common purpose: to reduce the number of phrases to search.
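As a rough illustration of how such a restriction works, the sketch below filters a tiny phrase inventory by controller position. The `Phrase` class, the position tags, and the four phrases are hypothetical and chosen only for the example; a real ATC grammar is far larger and is normally expressed in the ASR vendor's own grammar format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phrase:
    text: str
    positions: frozenset  # controller positions where the phrase is treated as valid


# Hypothetical inventory with illustrative position tags (assumptions, not real rules).
FULL_GRAMMAR = [
    Phrase("turn left heading", frozenset({"approach", "departure"})),
    Phrase("taxi to holding point", frozenset({"ground"})),
    Phrase("cleared for takeoff", frozenset({"tower"})),
    Phrase("hold position", frozenset({"ground", "tower"})),
]


def restrict_grammar(grammar, position):
    """Return only the phrase texts tagged as valid for the given position."""
    return [p.text for p in grammar if position in p.positions]


# The ground frequency no longer "knows" the heading instruction at all.
ground_grammar = restrict_grammar(FULL_GRAMMAR, "ground")
print(ground_grammar)
```

Anything filtered out here cannot be recognized on that frequency, even if the student actually says it.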

Why Avoid Grammar Limitation Methods?

The Verbyx ASR, VRX, performs extremely well on very large grammars. It has no arbitrary limitations and is fast enough to cope with enormous search spaces with very high accuracy. Even for ASR systems that cannot cope with large grammars, Verbyx would still recommend avoiding restrictions on the available grammar.

An ASR will always find a match with a phrase defined in the grammar. For instance, if your grammar contains only Phrase One, Phrase Two, Phrase Three, and Phrase Four but a user speaks Phrase Five (unsupported), the ASR will return a match with one of the supported phrases. Phrase Four is likely to be the returned result because it is the most acoustically similar to the spoken phrase. This describes what often happens in a system that uses grammar limitations.
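The forced-choice behavior described above can be sketched in a few lines. This is a toy model, not how any particular decoder is implemented: the `decode` function and the similarity values are hypothetical stand-ins for the acoustic scoring a real ASR performs on audio.

```python
def decode(similarity, active_grammar):
    """Return the best-scoring active phrase; there is no 'none of the above'."""
    return max(active_grammar, key=lambda phrase: similarity[phrase])


# Hypothetical similarity of each phrase to audio of a user saying "Phrase Five".
similarity = {
    "Phrase One": 0.31,
    "Phrase Two": 0.28,
    "Phrase Three": 0.35,
    "Phrase Four": 0.72,
    "Phrase Five": 0.97,
}

limited = ["Phrase One", "Phrase Two", "Phrase Three", "Phrase Four"]
full = limited + ["Phrase Five"]

limited_result = decode(similarity, limited)  # the spoken phrase is not available
full_result = decode(similarity, full)        # the spoken phrase is available

print(limited_result)  # Phrase Four
print(full_result)     # Phrase Five
```

The decoder behaves identically in both calls. Only the set of phrases it is allowed to consider changes the outcome.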

An ATC Example

In this example, the artificial grammar limitation is defined by the airspace sector. An aircraft has a flight plan route of Beacon A, Beacon E, and Beacon Y, all in Sector One, followed by Beacon D and Beacon R in Sector Two. Sector One also contains Beacon Z, but it is not part of the route.

The controller for Sector One has received, from the Sector Two controller, direct routing for the aircraft to Beacon D (Dee). Because the grammar is restricted by sector, only Beacons A, E, Y, and Z are valid for Sector One. The radio transmission “Callsign, proceed direct Beacon Dee” will return the closest match from only the fixes available in the Sector One grammar, which in this example is Beacon Zee (American pronunciation).

The Result of Using Artificial Grammar Limitations

The aircraft will respond “proceed direct Beacon Zee” and change route. Increased student workload is often the result of passing invalid commands to the simulation. Given that the ASR returned the closest match from the active phrases, poor recognition accuracy cannot be blamed.

Tuning Your Grammar Limitations

A resolution to the routing problem might be to add the missing Beacon Dee to the Sector One grammar. This will work for that instance. However, many years of experience let us say with confidence that this approach becomes a game of Whack-a-Mole: every time you solve one problem, another pops up. ATC is a complex environment with many variables. The permutations of grammar limitations are extensive and seem never-ending. Simulation vendors and customers may also find that limitation rules become invalid when environments and operating procedures differ.

Using ASR Confidence Scores as Protection

When an ASR returns a result, it also returns a confidence score. If asked to return 4 results, Beacon Zee may have a confidence score of 80, with the remaining three results having lower but different scores.

Confidence scores provide some level of protection from invalid instructions. Invalid instructions, if not blocked, lead to serious usability issues and increased frustration for the user. However, finding a confidence threshold that provides an optimal balance between rejecting and accepting recognition results is not trivial. It is also not the most effective method of handling poor recognition.
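The threshold trade-off can be made concrete with a small sketch. The `accept` function and both result lists are hypothetical; only the score of 80 for Beacon Zee comes from the example above, and real ASR engines report confidence on their own scales.

```python
def accept(n_best, threshold):
    """Return the top hypothesis if its confidence meets the threshold, else None."""
    if not n_best:
        return None
    phrase, confidence = max(n_best, key=lambda item: item[1])
    return phrase if confidence >= threshold else None


# Hypothetical 4-best list for "proceed direct Beacon Dee" decoded against
# a grammar limited to Sector One (Beacon Dee is not available).
n_best = [("Beacon Zee", 80), ("Beacon E", 64), ("Beacon A", 41), ("Beacon Y", 22)]

# Hypothetical result for a correctly recognized transmission.
correct = [("Beacon A", 85), ("Beacon E", 40), ("Beacon Y", 33), ("Beacon Zee", 12)]

print(accept(n_best, 70))   # Beacon Zee: the wrong beacon passes a lenient threshold
print(accept(n_best, 90))   # None: a strict threshold blocks it...
print(accept(correct, 90))  # None: ...but also rejects a correct result
```

No single threshold separates the two cases cleanly, which is why tuning the value is difficult.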

Even when using confidence scores, there are additional complications. What feedback does the system provide to the user? If the simulated entity is passed an invalid command, the response is often “say again”. The user will repeat the radio transmission in an effort to resolve the problem, perhaps speaking more slowly or more quickly, or enunciating more carefully. The probability that the same misrecognition will occur is high, and the user often cannot prevent it. In ATC, the traffic situation changes so quickly that it may already be too late by the time the transmission is finally recognized correctly.

Have You Chosen the Correct ASR?

The function of an ASR is to translate spoken words into text. The ASR result will be incorrect if the ASR does not have access to all possible phrases. Artificial grammar limitations will not improve accuracy; quite the opposite. A well-designed ASR will have the capacity to search ultra-large grammars and produce high levels of accuracy, either faster than real time (batch recognition) or close enough to real time that the delay is not noticeable during a human-in-the-loop simulation.

If forced to use artificial grammar limitations due to ASR shortcomings, it might be time to revisit your ASR solution.

An Alternative Approach

Using an ASR that can effectively and accurately manage large grammars should be the first consideration. A capable ASR will produce improved accuracy if all of the supported phraseology is available to search. How an ASR deals with such a complex problem is beyond the scope of this post. However, before deciding on an ASR, there are reliable ways to measure the capabilities of your chosen technology. For more information, download this free guide: Writing Requirements for Speech Recognition.

The Problem

If you are not in a position to change your ASR, consider adopting command validation.

Even an ASR that delivered 100% accuracy in a lab environment will return incorrect results in a representative user environment. Why might a 100% accurate ASR still result in usability issues? We have all heard the phrase, “No plan survives the first encounter with the enemy”. In this case, the student is the “enemy”.

By definition, a student is inexperienced. Knowing what to say and when to say it is a key part of training in many domains. Consequently, students will use unexpected terminology. As described earlier, the ASR will return the most likely result from the available grammar. If the student spoke an unsupported phrase, incorrect recognition is likely (a correct result can still occur if the spoken phrase closely resembles a supported phrase and the confidence score setting permits acceptance). Similar problems occur with experienced users, who may tend to adopt casual and locally specific terminology.

Once that fact is accepted, command validation can deliver significant usability improvements. The objective of command validation is to prevent invalid commands from reaching the simulator.

What Is Command Validation?

Artificial grammar limitation methods use simulator information to restrict the phrase search. The same information can instead be used to validate the ASR result. The following example demonstrates a student issuing an instruction to a simulated aircraft that is not active on frequency:

- The simulator recognizes the callsign and instruction.

- The command validation component checks whether the callsign is active on the frequency in use.

- The instruction is rejected if the callsign is not on frequency.

In older implementations of ASR, the simulator may respond with “say again”. In ATC, if you call an aircraft that is not on frequency, silence is the appropriate response.
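The check described above might look something like the following minimal sketch. The `Command` class, the `validate` function, the frequency value, and the callsigns are all hypothetical; a real implementation would query the simulator for its current state.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    callsign: str
    instruction: str


def validate(command, frequency, callsigns_by_frequency) -> Optional[Command]:
    """Pass the command through only if its callsign is active on the frequency.

    Returning None means nothing reaches the simulator and no reply is
    generated, which models the silence a controller would hear.
    """
    active = callsigns_by_frequency.get(frequency, set())
    return command if command.callsign in active else None


# Hypothetical simulator state: which callsigns are on which frequency.
simulator_state = {"118.700": {"ABC123", "XYZ789"}}

on_frequency = Command("ABC123", "descend flight level 80")
off_frequency = Command("DEF456", "descend flight level 80")

print(validate(on_frequency, "118.700", simulator_state))   # the command passes
print(validate(off_frequency, "118.700", simulator_state))  # None (silence)
```

Note that validation runs after recognition, so the ASR still searches the full grammar.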

Command Validation and Artificial Grammar Limitations

Validation rules can be simple or sophisticated. The information provided by the simulator determines the level of sophistication possible. In the earlier routing example, command validation can still add value when using artificial grammar limitations. If the recognized beacon is determined not to be part of the route, the response system can be instructed to question the command to route to the incorrect beacon.
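For the routing example, a route-aware rule could be sketched as follows. The function name, the returned action labels, and the wording of the query are assumptions made for illustration; the appropriate response phraseology depends on the simulator and the training environment.

```python
def validate_direct_to(beacon, route):
    """Accept a direct-to instruction only if the beacon is on the flight plan route.

    Returns an (action, text) pair, where action is "accept" or "query".
    """
    if beacon in route:
        return ("accept", f"proceed direct {beacon}")
    # Hypothetical query wording; the simulated pilot questions the instruction.
    return ("query", f"confirm direct {beacon}")


# Flight plan route from the earlier example; Beacon Z is in Sector One but off-route.
route = ["Beacon A", "Beacon E", "Beacon Y", "Beacon D", "Beacon R"]

print(validate_direct_to("Beacon Z", route))  # ('query', 'confirm direct Beacon Z')
print(validate_direct_to("Beacon D", route))  # ('accept', 'proceed direct Beacon D')
```

With this rule in place, the misrecognized Beacon Zee is questioned instead of silently changing the aircraft's route.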

Are Artificial Grammar Limitations a Good Idea?

Frankly, no, unless your options are limited by ASR design. If you are forced to use this method, give some thought to complementing your design with command validation. Of course, command validation adds expense, but the dramatic increase in usability and realism is worth every penny.